From Scorecards to Strategy: Using Coalition Health Data to Guide Support and Change

Overview

No matter the source, data is only useful if it changes what people do.

The Coalition Health Diagnostic Tool was designed to make collaboration visible. Its real value is not in the scorecards themselves, but in how leaders and backbone staff use those insights to decide where to focus attention, how to support working groups, and when to intervene.

What follows is a practical way to interpret coalition health data and use it to guide support, alignment, and change. If you haven’t already read the grounding piece, Coalition Health Diagnostic & Governance Framework, it provides helpful context for what comes next.

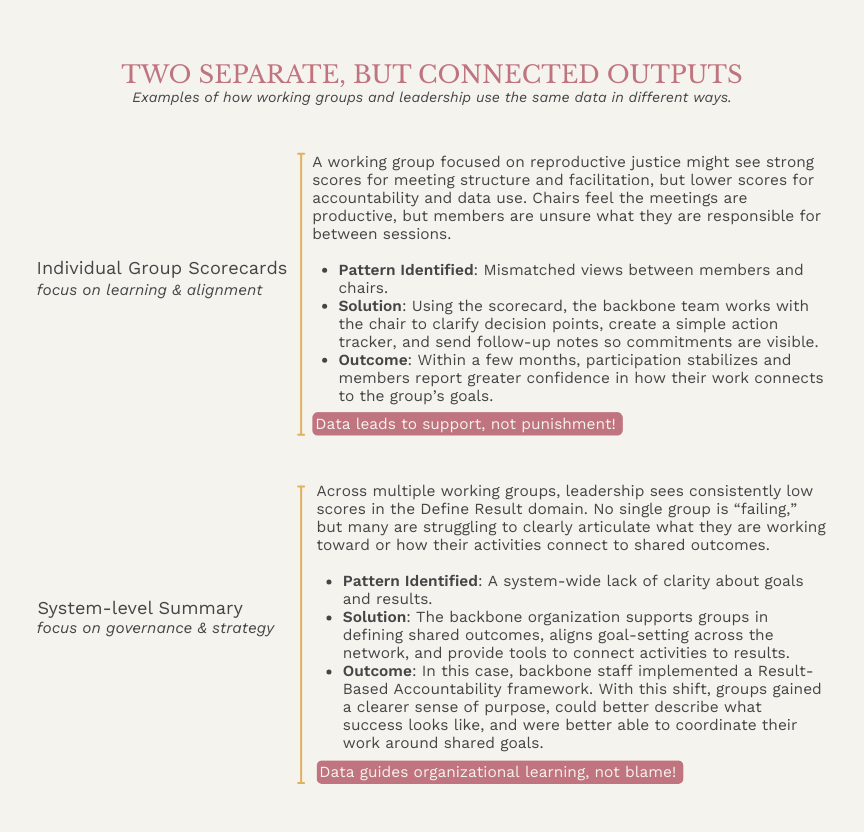

Two Views, Two Purposes

The tool produces two kinds of insight:

Individual Coalition Scorecards, which help each group understand its own strengths, tensions, and opportunities for growth.

Cross-Coalition Summary, which allows leadership and staff to see patterns across the system and decide where more facilitation, redesign, or support is needed.

Group-level scorecards focus on learning and alignment.

They give a working group a clearer picture of how its collaboration is actually being experienced. By bringing together input from chairs, members, and backbone staff, the scorecard surfaces where people are on the same page and where they are not. Alignment builds confidence. Gaps create useful questions. Those differences become an entry point for conversation, reflection, and adjustment. Used well, group-level scorecards create a shared language for how the group works, making it easier to clarify roles, strengthen facilitation, and improve follow-through.

System-level summaries focus on governance and strategy.

The cross-coalition view shifts attention from any one group to the health of the system as a whole. It allows leadership to see patterns across working groups, distinguish one-off challenges from structural ones, and decide where support or change is most needed. Instead of asking which groups are doing well or poorly, this view supports more useful questions: Where does the system need more facilitation? Where are goals or data practices misaligned? Where would a change in structure, leadership, or resourcing have the greatest impact? In this way, the summary becomes a tool for stewarding collaboration over time, not just measuring it.

These two views serve different purposes and should not be used in the same way. Confusing the two can lead to defensiveness or inaction!

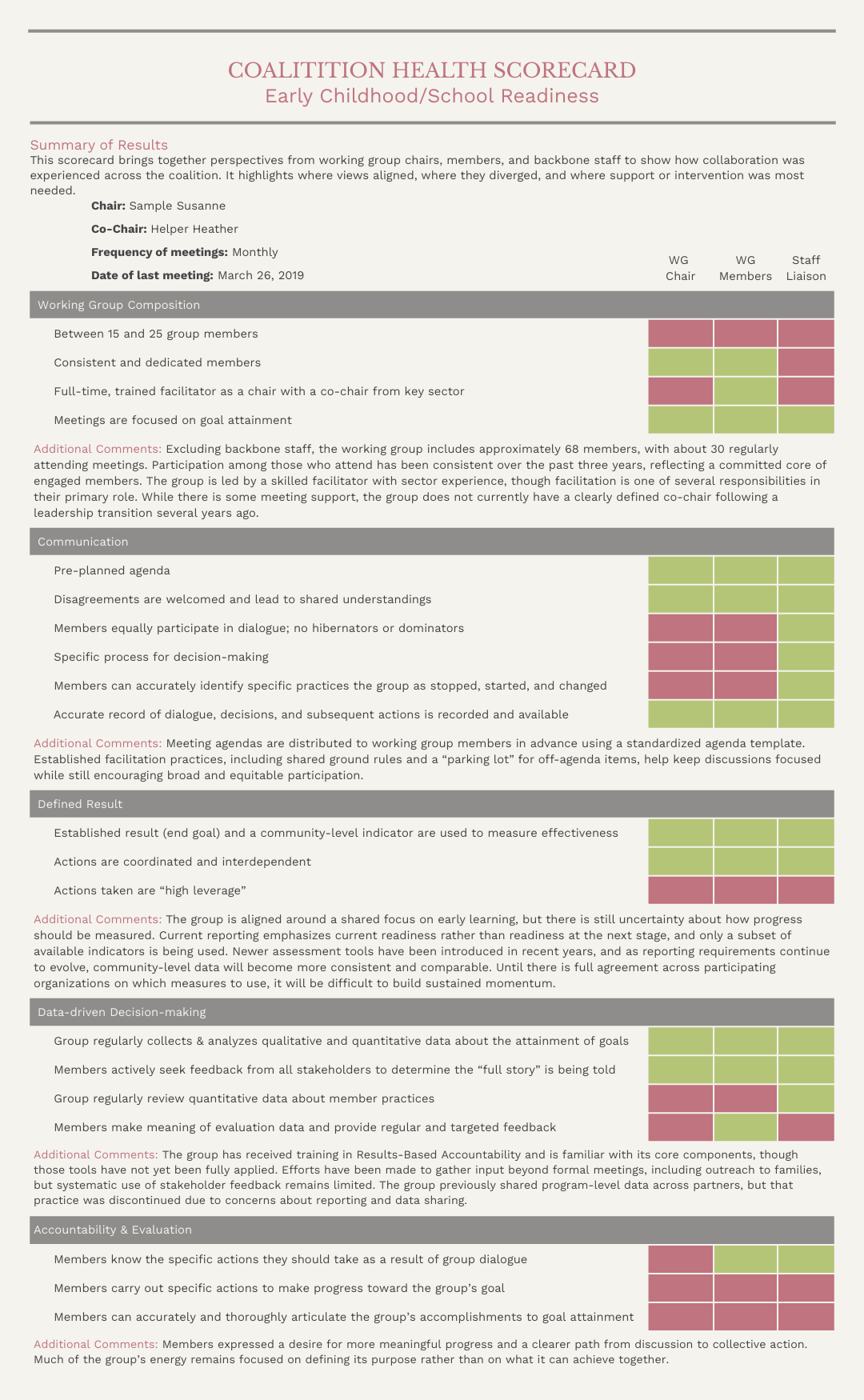

How to Read a Working Group Scorecard

Remember, a working group scorecard is not a report card. It is a way of seeing how collaboration is actually experienced.

Each row represents a different vantage point within the system. Chairs, members, and backbone staff are working toward the same goals, but they do not always experience the work the same way. When those perspectives diverge, the difference is often more important than the score itself.

Here are some common patterns to look out for:

Chairs rate domains higher than members

This typically indicates that chairs believe meetings are productive, while members are less clear about what decisions were made and how those decisions are translated into action. It points to the need for clearer communication (perhaps more detailed prep materials), stronger facilitation (for example, a concrete decision-making structure like Fist to Five), and more explicit follow-through (sending out a recap or meeting notes). This is not necessarily a failure of group leadership. Staff need to offer coaching and practical tools; Chairs need to be open to feedback and listen to staff suggestions; and Leadership needs to ensure chairs have the time and support to lead effectively.

Members rate domains higher than staff

This usually means the group feels energized and connected, but is not yet aligned with the backbone’s broader strategy, data practices, or expectations. When you see this pattern, staff and chairs need to work together to translate that excitement and momentum into coordinated, durable action. Staff need to help translate activity into measurable results and shared reporting; Chairs should clarify priorities so effort is aligned with system-level goals; and Members should continue doing the work while being more explicit about how it connects to shared outcomes.

Scores are low across all perspectives

When chairs, members, and staff all rate a domain poorly, the issue is rarely about effort. It is about structure. The group may lack a clear purpose, effective leadership, or the right mix of partners. Staff need to bring additional options to the table, including sunsetting or rebuilding the group; Chairs need to be explicit about what isn’t working, explore constraints, and advocate for additional resources; and Members should share openly where the work is breaking down and what support they need to contribute meaningfully.

These patterns are not always indicators of a strong or weak group. They offer a way to understand where responsibility, structure, and support need to be adjusted. When coalition health is read this way, it becomes a way to care for the system and the people within it.
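If your team exports scorecard ratings to a spreadsheet or script, the three patterns above can be checked with a few lines of code. The Python sketch below is a hypothetical illustration, not part of the Diagnostic Tool itself: it assumes a 1 to 5 rating scale, treats a one-point difference between perspectives as a divergence worth discussing, and uses 2.5 as the cutoff for "low." The names `scorecard` and `read_patterns`, the "Shared Purpose" domain, and all scores are made up for the example. It only flags patterns as prompts for conversation; it does not grade the group.

```python
# Hypothetical working group scorecard: average rating per domain from each
# perspective, on an assumed 1-5 scale. Domains and numbers are illustrative.
scorecard = {
    "chairs":  {"Communication": 4.5, "Evaluation & Accountability": 2.0, "Shared Purpose": 4.0},
    "members": {"Communication": 3.0, "Evaluation & Accountability": 2.2, "Shared Purpose": 4.4},
    "staff":   {"Communication": 3.8, "Evaluation & Accountability": 1.8, "Shared Purpose": 3.1},
}

GAP = 1.0  # assumed: a one-point difference between perspectives is worth discussing
LOW = 2.5  # assumed: if every perspective is below this, the domain is low across the board


def read_patterns(card, gap=GAP, low=LOW):
    """Return (domain, pattern) pairs for the three patterns described above."""
    findings = []
    for domain in card["chairs"]:
        chairs, members, staff = (card[p][domain] for p in ("chairs", "members", "staff"))
        if max(chairs, members, staff) < low:
            # Everyone agrees it is not working: look at structure, not effort.
            findings.append((domain, "low across all perspectives"))
            continue
        if chairs - members >= gap:
            # Meetings feel productive to chairs, but decisions are unclear to members.
            findings.append((domain, "chairs higher than members"))
        if members - staff >= gap:
            # Energy is high, but not yet connected to backbone strategy or data.
            findings.append((domain, "members higher than staff"))
    return findings


for domain, pattern in read_patterns(scorecard):
    print(f"{domain}: {pattern}")
```

Each flag is a starting point for the conversations described above, and the thresholds are worth adjusting to fit your own rating scale and context.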

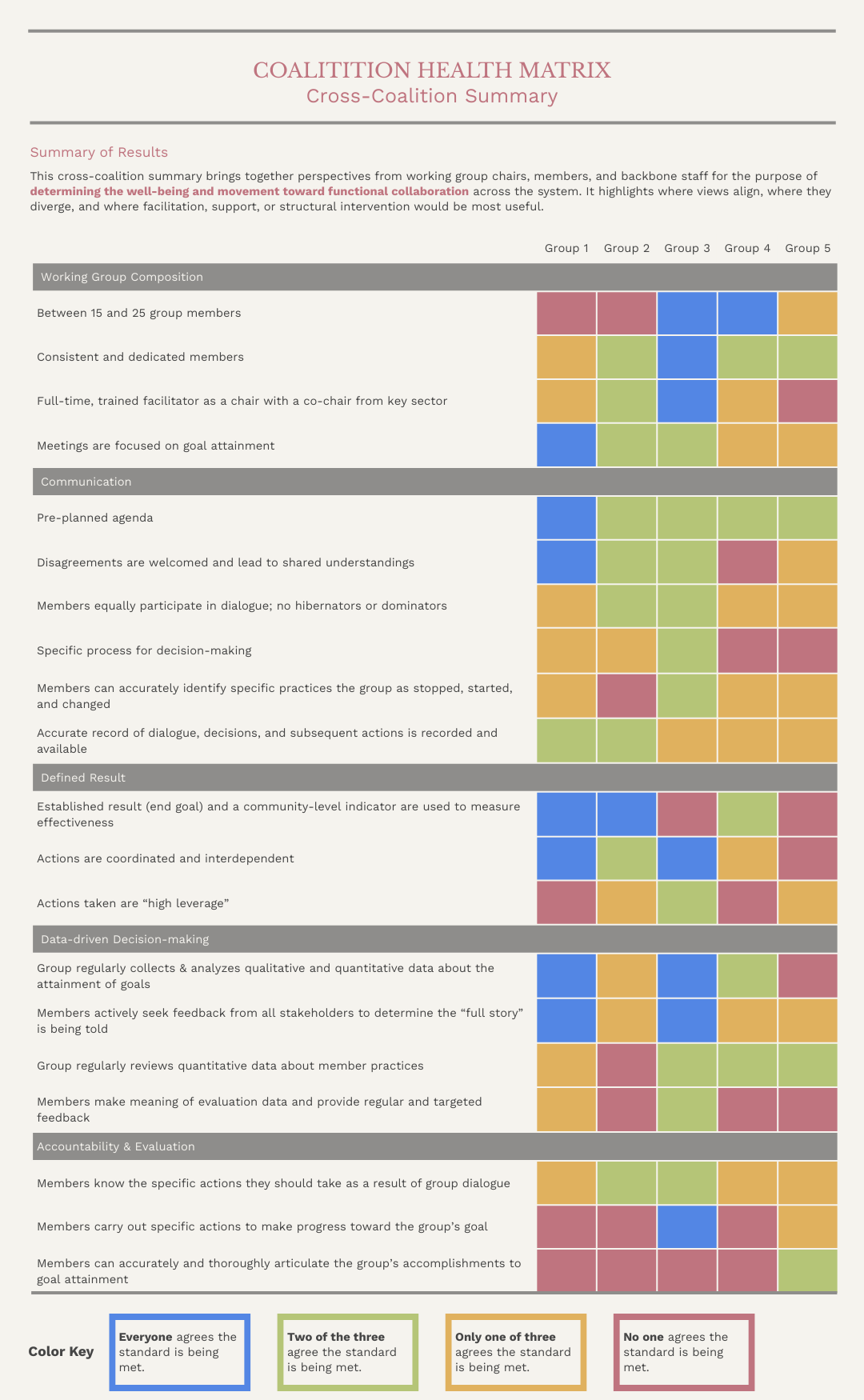

How to Read the Cross-Coalition Summary

The cross-coalition summary answers a different question:

Where is the system healthy, and where is it under strain?

This view should not be used to compare groups to one another. It is about understanding how the system is working as a whole. Patterns across multiple working groups are far more important than any single score.

When reviewing the cross-coalition summary, leadership should look for three things: (1) clusters of low scores in the same domain across several groups, (2) recurring misalignment between chairs, members, and staff, and (3) signals that point to backbone-wide changes rather than group-level fixes.

In the example shown, several groups struggle with aspects of the Evaluation & Accountability domain. Instead of indicating a problem with any one group, this pattern points to how the backbone organization defines success, supports measurement, or holds everyone accountable to expectations around data sharing. Responses might include clearer expectations (like a working group participation agreement with members), improvements to data infrastructure, or additional funding to help partners meet reporting requirements.

Let’s take a look at another domain, “Communication.” In the example, most of the groups show a mix of ratings, which signals disagreement about how well the standards are met within each group. In addition to providing tools for agendas, decision-making, and follow-up, leadership can highlight the coalitions where everyone agrees the standards are being met. Those groups become sources of learning for the network. This might take the form of a peer-led panel highlighting effective practices or small stipends for chairs to mentor others. Remember, the goal is not to compare groups, but to recognize that while backbone staff can provide structure and resources, working group members are experts in their own experience. Often, the most meaningful insights are communicated best through peers.
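The same reasoning can be applied across groups. This second hypothetical Python sketch organizes results by domain rather than by group, so nothing in it ranks one coalition against another. It flags a domain as system-wide when at least two groups score it low on average or show a one-point spread between chairs, members, and staff, and it lists groups where perspectives agree and ratings are high as possible sources of peer learning. The group names, scores, thresholds, and the `system_patterns` function are all assumptions made for illustration, not features of the Diagnostic Tool.

```python
from statistics import mean

# Hypothetical cross-coalition data: group -> domain -> rating by perspective
# (assumed 1-5 scale). Group names and scores are invented for illustration.
summary = {
    "Housing": {
        "Evaluation & Accountability": {"chairs": 2.1, "members": 2.4, "staff": 1.9},
        "Communication": {"chairs": 4.6, "members": 2.9, "staff": 3.5},
    },
    "Health": {
        "Evaluation & Accountability": {"chairs": 2.3, "members": 2.0, "staff": 2.2},
        "Communication": {"chairs": 4.2, "members": 4.1, "staff": 4.3},
    },
    "Education": {
        "Evaluation & Accountability": {"chairs": 2.6, "members": 2.2, "staff": 1.7},
        "Communication": {"chairs": 3.0, "members": 4.4, "staff": 3.2},
    },
}

LOW, HIGH, GAP, MIN_GROUPS = 2.5, 4.0, 1.0, 2  # all assumed thresholds


def system_patterns(data, low=LOW, high=HIGH, gap=GAP, min_groups=MIN_GROUPS):
    """Summarize each domain across groups instead of ranking groups."""
    by_domain = {}
    for group, domains in data.items():
        for domain, ratings in domains.items():
            scores = list(ratings.values())
            avg, spread = mean(scores), max(scores) - min(scores)
            entry = by_domain.setdefault(domain, {"low": [], "misaligned": [], "learning": []})
            if avg < low:
                entry["low"].append(group)  # part of a possible cluster of low scores
            if spread >= gap:
                entry["misaligned"].append(group)  # chairs, members, and staff disagree
            elif avg >= high:
                entry["learning"].append(group)  # aligned and strong: a peer-learning source
    for entry in by_domain.values():
        # A pattern in several groups points to backbone-wide change, not a group fix.
        entry["system_wide"] = (len(entry["low"]) >= min_groups
                                or len(entry["misaligned"]) >= min_groups)
    return by_domain


for domain, entry in system_patterns(summary).items():
    print(domain, entry)
```

With these made-up numbers, Evaluation & Accountability is flagged because all three groups score it low, while Communication is flagged for recurring misalignment, with one group (Health) standing out as a possible source of peer learning.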

With this interpretation, the cross-coalition summary becomes a tool for stewarding the system rather than managing individual groups. It helps leadership see where the structure of the network needs to change, where additional support is required, and where learning already exists inside the community. When patterns guide decisions, the work shifts from reacting to problems to intentionally building the conditions that allow collaboration to thrive.

Turning Patterns into Action

The most important takeaway is this:

Instead of looking for what’s broken, look for where support would make the biggest difference. Don’t ask which groups are failing. Instead ask what kind of support the network needs right now.

Coalition health data is most powerful when it guides how attention, time, and resources are allocated. It helps leadership see where facilitation would be most helpful, which chairs would benefit from coaching, where goals need more clarity, when leadership structures should be adjusted, and how data and accountability practices can be strengthened across the network.

Because the data is triangulated across chairs, members, and staff, these decisions are grounded in patterns rather than individual perspectives. Leaders are not reacting to a single story, but responding to what the system is showing them.

Protecting Trust While Using Data

Important caveat! Data only works when people trust how it is used. This means working group scorecards are used for reflection and learning, not ranking. System-level summaries are used for strategy and support, not blame. There needs to be transparency about what the data will and will not be used for.

This kind of trust also requires attention to power, equity, and community voice. Resources like Why Am I Always Being Researched? from Chicago Beyond, Urban Institute’s Elevate Data for Equity project, and the Data Equity Framework from We All Count offer practical guidance for designing data systems that are transparent, reciprocal, and grounded in shared responsibility rather than extraction (all linked below!).

When groups see that honest feedback leads to engaging facilitation, clearer expectations, and real support rather than punishment, they become more open over time. That openness is what makes learning, adaptation, and improvement possible.

Why This Matters

Complex systems and networks rarely struggle because people do not care. They struggle because no one can clearly see how the network is actually functioning.

By combining scorecards, triangulated perspectives, and continuous feedback loops, this data makes collaboration visible in a way that supports better decisions and more human-centered leadership. It creates a shared understanding, surfaces where the work needs support, and helps leaders care for both people and the structures that make collective work possible.

This is how collaboration becomes something leaders can steward with care and intention, and something people can rely on rather than just hope for.

Additional Learning Opportunities

Fist to Five Consensus-Building Tool explained by Civic Canopy. I have used this method in hundreds of meetings! If your group is used to majority-rules decision-making, this can be a rough transition, but stick with it! This method really highlights the art of making the implicit explicit and shows how conversation can strengthen the decisions made.

Masterful Meetings: Meeting Facilitation Training offered by Leadership Strategies. I completed this training a few years ago, and it opened my eyes to how, as the facilitator, the energy you bring into the space can really make or break the meeting.

Why Am I Always Being Researched? by Chicago Beyond.

I keep the pocket version of this report on my desk as a quick reference and reminder of what’s really important. It is a powerful reframing toward respect, reciprocity, and shared ownership of knowledge.

Principles for Advancing Equitable Data Practice by Marcus Gaddy & Kassie Scott.

This resource is part of Urban Institute’s Elevate Data for Equity project. I appreciate that it starts in familiar territory, the protections of Institutional Review Boards (IRBs) and the Belmont Report, then expands those concepts to include questions of power and equity. I find myself coming back to it whenever I’m designing data systems and tools that need to be not only technically solid, but also ethical and human-centered.

The Data Equity Framework from We All Count.

I love this framework because it reminds me that data is not just numbers. It is a series of choices about what gets collected, who is represented, and how information is used. It is a great tool for surfacing power and accountability in all data work.