Coalition Health Diagnostic & Governance Framework

Overview

Most community coalitions fail for the same quiet reasons. It is not a lack of passion or a lack of funding. It is the absence of shared accountability, usable data, and real decision-making infrastructure.

In 2016, my organization asked me a simple but uncomfortable question:

Are our working groups actually functioning as collaborative engines for change, or are we just meeting a lot?

I created a tool that gave both working groups and leadership a consistent, evidence-based way to see where collaboration was working, where it was breaking down, and where intervention would have the highest leverage. I called it the Coalition Health Diagnostic Tool.

Challenge

At the time, the organization supported nine cross-sector working groups spanning education, health, and economic mobility. Each group had a volunteer chair, a staff liaison, a theory of change, and a full calendar of meetings.

What we did not have was a consistent way to measure:

whether the right people were at the table

whether decisions led to coordinated action

whether data was being used to guide strategy

whether anyone could clearly articulate progress toward results

Without that, leadership could not tell which working groups were thriving, which were drifting, and where intervention was needed.

Design Framework

I brought together proven collaboration frameworks into one practical model aligned with how the organization actually worked.

FSG Working Group Standards

I drew on How to Lead Collective Impact Working Groups from FSG, which provides evidence-based guidance on effective working group practices, including size, facilitation, and membership.

Results-Based Accountability (Turn the Curve Thinking)

Results-Based Accountability provided the structure for linking community-level results to specific strategies. It helped working groups use data to tell the full story, identify partners, and commit to actions that could actually move outcomes.

Team Collaboration Assessment Rubric (TCAR)

TCAR builds on decades of research into collaborative practice and has been refined and field-tested by university researchers, evaluators, and practitioners. It provides a practical way to assess and strengthen how teams think, decide, and act together.

Cross-Functional Collaboration and Team Science

A cross-functional team is a group of people who apply different skills, with a high degree of interdependence, to ensure the effective delivery of a common organizational objective. This body of research helped ground the framework in what makes diverse, multi-sector working groups actually perform. It informed how the model evaluates clarity of roles, leadership, communication, constructive conflict, and the group’s ability to coordinate action and adapt when strategies are not working. These were translated into five measurable domains of coalition performance (see below).

Product & Data Model

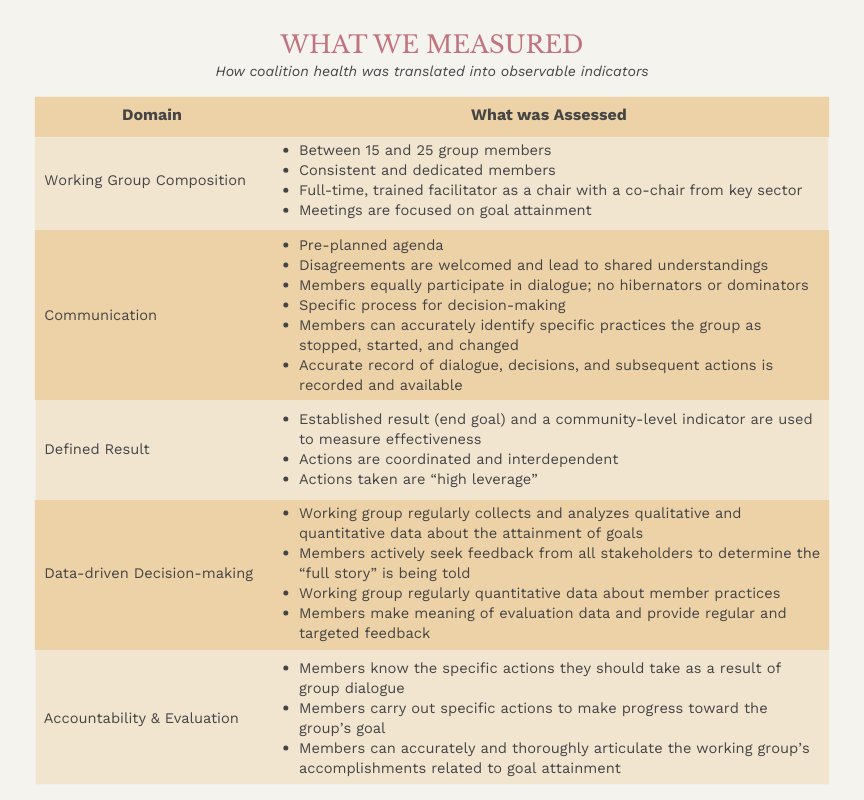

The Coalition Health Diagnostic Tool translated the design framework into a structured, measurable system that produced actionable scorecards. Each of the five domains was operationalized through concrete, observable indicators rather than subjective impressions.

Data was triangulated across three distinct perspectives: working group chairs, working group members, and staff liaisons. This ensured the diagnostic captured not just how a group saw itself, but how it was experienced by those facilitating and participating in the work.

Responses were synthesized into a single visual summary that showed:

where chairs, members, and staff agreed expectations were being met

where they agreed expectations were not being met

and where perceptions diverged, signaling misalignment, governance risk, or performance gaps.

This made it possible to distinguish between healthy disagreement, blind spots, and structural breakdowns, creating both a working-group view and a system-level view of coalition health across all nine working groups.
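The agree/disagree logic described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the tool's actual implementation: the 1-to-4 rating scale, the `MET_THRESHOLD` cutoff, the indicator names, and the function names `classify_indicator` and `summarize_group` are all assumptions made for the example.

```python
from statistics import mean

# Assumption: ratings use a 1-4 scale and a role's average of 3.0 or
# higher means that role sees the expectation as "met". The original
# tool's scale and cutoff are not specified in this write-up.
MET_THRESHOLD = 3.0
ROLES = ("chair", "member", "staff")


def classify_indicator(ratings_by_role):
    """Classify one indicator from the three perspectives.

    ratings_by_role maps each role to a list of numeric ratings.
    Returns "agreed_met", "agreed_not_met", or "divergent".
    """
    met = {role: mean(ratings_by_role[role]) >= MET_THRESHOLD for role in ROLES}
    if all(met.values()):
        return "agreed_met"
    if not any(met.values()):
        return "agreed_not_met"
    # At least one perspective disagrees with the others.
    return "divergent"


def summarize_group(responses):
    """Map every indicator for one working group to its classification."""
    return {name: classify_indicator(r) for name, r in responses.items()}


# Hypothetical responses for a single working group.
example = {
    "Right people at the table": {"chair": [4], "member": [3, 4, 3], "staff": [3]},
    "Data guides strategy": {"chair": [2], "member": [1, 2, 2], "staff": [2]},
    "Decisions lead to coordinated action": {"chair": [4], "member": [2, 2, 3], "staff": [2]},
}

summary = summarize_group(example)
for indicator, status in summary.items():
    print(f"{indicator}: {status}")
```

In this sketch, the third indicator comes out as divergent because the chair rates it as met while members and staff do not, which is the kind of perception gap the visual summary was meant to surface. Averaging each role's ratings before comparing them is one simple choice; a real instrument might instead compare distributions or flag gaps above a set size.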

Outcome

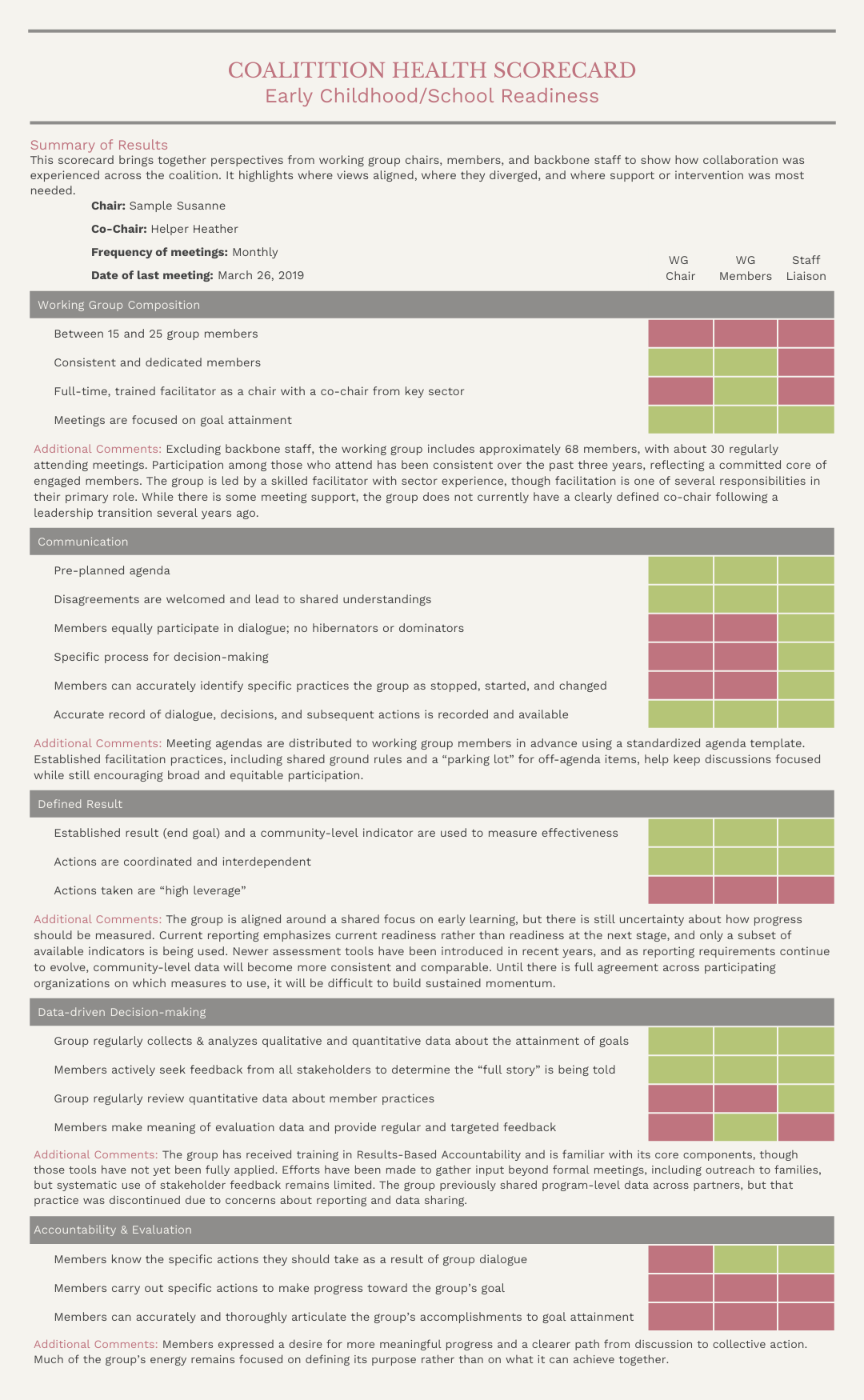

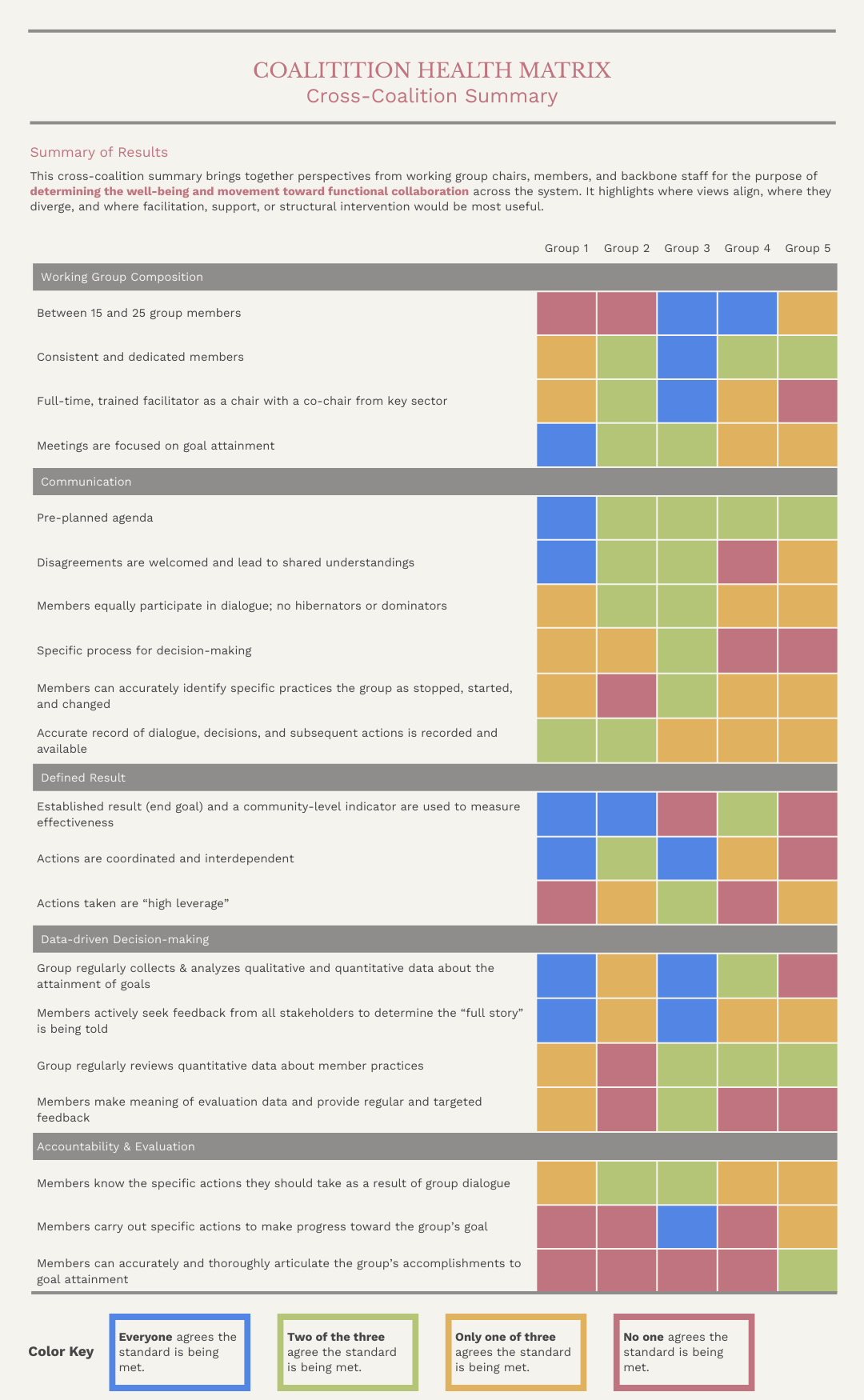

The Coalition Health Diagnostic Tool produced two complementary outputs: an individual working group scorecard and a cross-coalition summary view. Together, they provided a consistent way to understand how well working groups were functioning as engines for collective impact, and they were designed not just for visibility but for strategic learning and continuous support.

The individual working group scorecard gave each group a structured, non-judgmental snapshot of how it was functioning across the five coalition health domains. By combining ratings with qualitative context, the scorecard helped groups see their own strengths, blind spots, and areas where additional facilitation or alignment would be most useful. It created a concrete starting point for reflection and course correction rather than relying on informal impressions or personality dynamics. Below is an anonymized snapshot of how one working group experienced collaboration, based on triangulated perspectives from chairs, members, and staff to ensure collaboration was understood from multiple, lived perspectives.

The cross-coalition summary scorecard allowed leadership to see how collaboration was functioning across the entire system. Instead of treating each working group in isolation, they could compare patterns, identify common challenges, and prioritize where backbone support, facilitation, or structural changes would have the greatest impact.

Leadership could quickly see:

which working groups had strong facilitation, clear results, and coordinated action

which lacked shared accountability or meaningful use of data

and where targeted support or structural redesign was needed.

Together, these two views turned collaboration into something that could be discussed, supported, and stewarded over time. Instead of relying on anecdote or meeting attendance, leadership could see where collaboration was strong, where it was strained, and where intervention would have the highest leverage. The tools also created a shared language across working groups for what effective collaboration looked like, making it easier to set expectations, offer feedback, and support continuous improvement.

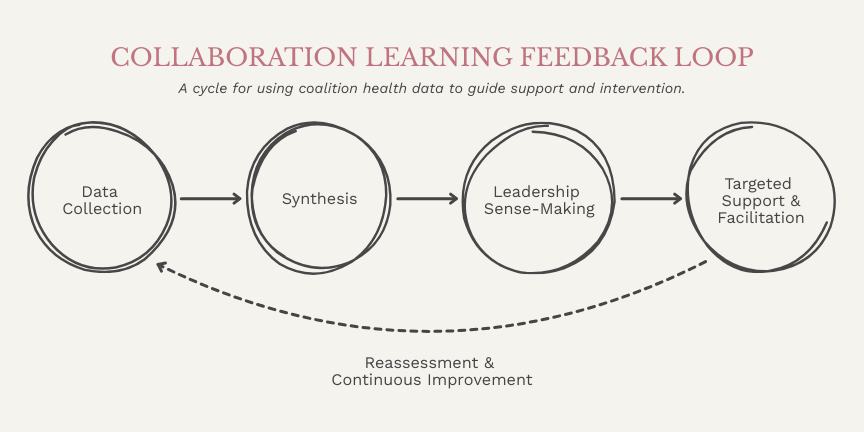

This cycle connects working groups, backbone staff, and leadership in a shared learning and support system. It links group experience, facilitation, and leadership decision-making so that feedback is translated into targeted support and continuous improvement over time.

Why It Matters

Coalitions sit at the center of some of the most complex work in communities. They bring together people from different institutions, sectors, and lived experiences to solve problems no single organization can solve alone. But without shared structures for accountability, decision-making, and learning, even well-intentioned collaborations can drift, stall, or unintentionally reinforce the very inequities they are meant to address.

The Coalition Health Diagnostic Tool made collaboration visible and governable. It gave leaders and facilitators a way to understand not just what groups were doing, but how they worked together, where responsibility was concentrated, and where support was needed. By grounding collaboration in data, dialogue, and shared expectations, the tool helped shift working groups from activity to collective action.

This same approach carries into my broader work in data infrastructure and systems design. Whether the system is a coalition, a partner network, or a data platform, the goal is the same: to build structures that turn shared purpose into coordinated action and measurable progress, while centering the people who do the work and the communities they serve.

Further Reading

This framework also draws on a broader body of research on collaboration and organizational effectiveness. For readers who want to explore the thinking behind it in more depth, these resources provide strong grounding for this work:

Uribe, Daniela, Carina Wendel, and Valerie Bockstette. How to Lead Collective Impact Working Groups. FSG.

Friedman, Mark. Trying Hard Is Not Good Enough: How to Produce Measurable Improvements for Customers and Communities. Santa Cruz, CA: FPSI Publishing, 2005.

Mattessich, P. W., Murray-Close, M., Monsey, B. R., and Monsey, P. B. The Wilder Collaboration Factors Inventory: Assessing Your Collaboration’s Strengths and Weaknesses. Saint Paul, MN: Fieldstone Alliance, 2001.

Woodland, R. H., and Hutton, M. S. “Evaluating Organizational Collaborations.” American Journal of Evaluation 33, no. 3 (2012): 366–383.